Azure Reader Architecture

As I promised before, I will explain the Azure Reader application, a sample RSS reader built on the Windows Azure platform. In this post I will walk you through the application's architecture and code.

Let's start with the data model, which is fairly simple. The Feeds table stores the feeds (ID and URL), and the FeedsSubscriptions table stores the users' subscriptions to those feeds. Feed items are stored in the FeedsItems table, which holds the properties of each item such as title, URL, author, and publish time. The actual content and summary of a feed item are not stored in the database directly. Instead, they are stored in Windows Azure blob storage and referenced from the table through the ContentBlobUri and SummaryBlobUri fields.
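To make the split between table rows and blob content concrete, here is a minimal C# sketch of the three tables as plain classes, plus a tiny in-memory stand-in for blob storage. Only the feed URL, the item properties listed above, and the two blob URI columns come from the application; `UserId`, `FeedId`, `FakeBlobStore` and its methods are hypothetical names used for illustration. The real application uses LINQ to SQL entities and the Windows Azure storage client instead.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical shapes for the three tables. Only FeedUrl, the listed item
// properties and the two blob URI columns come from the post.
public class Feed
{
    public int Id { get; set; }
    public string FeedUrl { get; set; }
}

public class FeedSubscription
{
    public int Id { get; set; }
    public string UserId { get; set; }   // assumption: the Live ID user identifier
    public int FeedId { get; set; }      // refers to Feed.Id
}

public class FeedItem
{
    public int Id { get; set; }
    public int FeedId { get; set; }
    public string Title { get; set; }
    public string FeedItemUrl { get; set; }
    public string Author { get; set; }
    public DateTimeOffset PublishTime { get; set; }
    // The bodies live in blob storage; the row only keeps their addresses.
    public Uri ContentBlobUri { get; set; }
    public Uri SummaryBlobUri { get; set; }
}

// Hypothetical stand-in for blob storage: maps a blob URI to its text.
public class FakeBlobStore
{
    private readonly Dictionary<Uri, string> blobs = new Dictionary<Uri, string>();
    private readonly Uri baseUri;

    // baseAddress should end with '/' so container names are appended to it.
    public FakeBlobStore(string baseAddress) { baseUri = new Uri(baseAddress); }

    // Stores the text and returns the URI to keep in the table row.
    public Uri Put(string container, string name, string text)
    {
        var uri = new Uri(baseUri, container + "/" + name);
        blobs[uri] = text;
        return uri;
    }

    public string Get(Uri uri) => blobs[uri];
}
```

With this split a FeedItem row stays small: the page can list titles from the table and fetch the body from `ContentBlobUri` only when the user opens an item.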

The application has three roles: one web role and two worker roles.

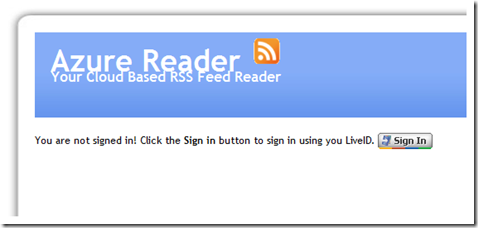

- The Azure Reader web role: the ASP.NET application that lets users log in, subscribe to feeds, and view new items.

I use Windows Live ID for authentication.

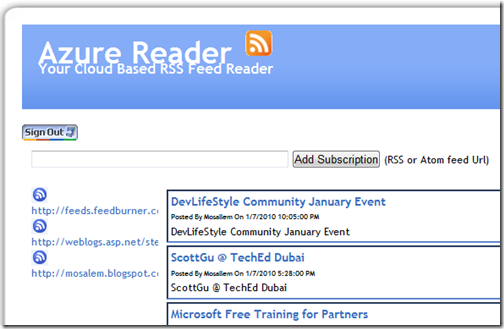

A list view on the left shows the feeds the user is subscribed to; clicking a feed shows its items in a second list view on the right. The user can add a new subscription by entering a feed URL (Atom or RSS) in the text box.
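The add-subscription action can be sketched as follows, assuming one shared Feed row per URL and ignoring repeat subscriptions by the same user. `SubscriptionService`, `Subscribe`, and `FeedsFor` are hypothetical names, dictionaries replace the SQL Azure tables, and the URL check is an assumption; the post does not say how the real page validates input.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory version of the "add subscription" action.
public class SubscriptionService
{
    // Stand-in for the Feeds table: one id per distinct feed URL.
    private readonly Dictionary<string, int> feedIdsByUrl = new Dictionary<string, int>();
    // Stand-in for the subscriptions table: (user, feed id) pairs.
    private readonly HashSet<(string User, int FeedId)> subscriptions = new HashSet<(string, int)>();
    private int nextFeedId = 1;

    // Returns the id of the feed the user is now subscribed to.
    public int Subscribe(string userId, string feedUrl)
    {
        // Assumption: only absolute http(s) URLs are accepted.
        if (!Uri.TryCreate(feedUrl, UriKind.Absolute, out Uri uri) ||
            (uri.Scheme != Uri.UriSchemeHttp && uri.Scheme != Uri.UriSchemeHttps))
            throw new ArgumentException("Expected an absolute http(s) feed URL.", nameof(feedUrl));

        // Reuse the existing Feed row so every subscriber shares one feed.
        if (!feedIdsByUrl.TryGetValue(feedUrl, out int feedId))
        {
            feedId = nextFeedId++;
            feedIdsByUrl[feedUrl] = feedId;
        }

        subscriptions.Add((userId, feedId)); // no-op if already subscribed
        return feedId;
    }

    // The feed URLs shown in the left-hand list for one user.
    public IEnumerable<string> FeedsFor(string userId) =>
        feedIdsByUrl.Where(kv => subscriptions.Contains((userId, kv.Value)))
                    .Select(kv => kv.Key);

    public int FeedCount => feedIdsByUrl.Count;
}
```

Sharing one Feed row per URL means the worker roles download each feed once, no matter how many users follow it.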

The web application communicates only with the SQL Azure database, so we can take advantage of Windows Azure's scalability by increasing the number of instances of this web role.

- The feeds aggregator worker role: this worker role periodically reads the feed URLs from the database and sends each one as a message to an Azure queue. It acts as the Job Manager (see Job Manager Workflow Pattern).

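```csharp
// Feeds aggregator (Job Manager): every 30 seconds, if the queue has been
// drained, enqueue the URL of every subscribed feed.
while (true)
{
    try
    {
        using (AzureReaderDataContext db = new AzureReaderDataContext())
        {
            // Only refill the queue once the readers have consumed the previous batch.
            if (FeedsQueueManager.IsFeedQueueEmpty())
            {
                // Get the feed URLs from the subscriptions table; Distinct() keeps a
                // feed with several subscribers from being queued more than once.
                string[] feeds = (from s in db.FeedSubscriptions
                                  select s.Feed.FeedUrl).Distinct().ToArray();

                foreach (string feedUrl in feeds)
                {
                    FeedsQueueManager.AddFeedToTheQueue(feedUrl);
                }
            }
        }
    }
    catch (Exception ex)
    {
        Logger.LogException(ex);
    }

    Thread.Sleep(30000);
}
```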
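Stripped of the database and queue plumbing, the Job Manager step reduces to "refill the queue only when it is empty, with one message per distinct feed". In this hypothetical sketch a `ConcurrentQueue<string>` stands in for the Azure queue and a list of (user, feed URL) pairs stands in for the FeedSubscriptions table; `FeedsAggregatorSketch` and `EnqueueFeedsIfIdle` are illustrative names, not part of the project.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, storage-free version of one aggregator pass.
public static class FeedsAggregatorSketch
{
    // Returns how many URLs were enqueued on this pass.
    public static int EnqueueFeedsIfIdle(
        IEnumerable<(string User, string FeedUrl)> subscriptions,
        ConcurrentQueue<string> queue)
    {
        // Skip the pass while readers are still working through the last batch,
        // so the same feed does not pile up in the queue.
        if (!queue.IsEmpty)
            return 0;

        // Several users can subscribe to the same feed; queue each feed once.
        string[] feeds = subscriptions.Select(s => s.FeedUrl).Distinct().ToArray();
        foreach (string url in feeds)
            queue.Enqueue(url);
        return feeds.Length;
    }
}
```

In the role itself this call would sit inside the `while (true)` loop, followed by the 30-second sleep.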

- The feeds reader worker role: this worker role does the actual retrieval of the feed items. It periodically takes a message containing a feed URL from the queue, retrieves that feed's items, and stores them in the database. This role should run multiple instances to increase throughput.

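```csharp
// Feeds reader: take one feed URL off the queue, download the feed, and
// insert or update its items. Several instances can run in parallel.
while (true)
{
    CloudQueueMessage feedMsg = null;
    try
    {
        using (AzureReaderDataContext db = new AzureReaderDataContext())
        {
            feedMsg = FeedsQueueManager.GetFeedFromQueue();
            if (feedMsg != null)
            {
                string feedUrl = feedMsg.AsString;
                Feed mainFeed = (from f in db.Feeds
                                 where f.FeedUrl == feedUrl
                                 select f).FirstOrDefault();

                // Unknown feed: skip it; the finally block deletes the message once.
                if (mainFeed == null)
                    continue;

                // DtdProcessing.Parse replaces the obsolete ProhibitDtd = false.
                using (XmlReader reader = XmlReader.Create(feedUrl,
                    new XmlReaderSettings { DtdProcessing = DtdProcessing.Parse }))
                {
                    // Picks the RSS or Atom formatter for this document.
                    SyndicationFeedFormatter formatter = SyndicationFormatterFactory.CreateFeedFormatter(reader);
                    formatter.ReadFrom(reader);
                    SyndicationFeed feed = formatter.Feed;

                    // Description is optional (e.g. an Atom feed without a subtitle).
                    string description = feed.Description != null ? feed.Description.Text : "";
                    CloudBlobContainer feedItemsContainer =
                        BlobManager.StoreFeedInfo(feedUrl, feed.Title.Text, description);

                    foreach (SyndicationItem item in feed.Items)
                    {
                        // Prefer the "alternate" link, then any link, then the base URI.
                        SyndicationLink link = item.Links.FirstOrDefault(l => l.RelationshipType == "alternate")
                                               ?? item.Links.FirstOrDefault();
                        string feedItemUrl = link != null ? link.Uri.ToString() : item.BaseUri.ToString();

                        var fi = (from f in db.FeedItems
                                  where f.FeedItemUrl == feedItemUrl
                                  select f).FirstOrDefault();

                        if (fi != null)
                        {
                            // Known item: refresh its blobs only if it changed.
                            if (fi.LastUpdatedTime != item.LastUpdatedTime)
                                UpdateItemBlobs(feedItemsContainer, item, fi);
                        }
                        else
                        {
                            AddNewFeedItem(db, mainFeed, feedItemsContainer, item, feedItemUrl, fi);
                        }
                        db.SubmitChanges();

                        // fi is passed by value above, so it is still null for a new item.
                        UpdateUsers(fi, feed.Title.Text, db);
                        db.SubmitChanges();
                    }
                }
            }
        }
    }
    catch (Exception ex)
    {
        Logger.LogException(ex);
    }
    finally
    {
        // Delete the message exactly once, whether the feed succeeded, failed or was skipped.
        if (feedMsg != null)
            FeedsQueueManager.DeleteFeedFromQueue(feedMsg);
    }

    Thread.Sleep(5000);
}
```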
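Several reader instances can share one queue because an Azure queue hides a message from other readers once it has been retrieved, and removes it only when the reader deletes it with the receipt it was given; if the reader dies before deleting, the message becomes visible again after the visibility timeout. The toy queue below models just that behaviour with a manual clock so it can be stepped through deterministically. `ToyQueue` and its members are hypothetical, not the storage client API. Because the reader above deletes in `finally`, a feed that throws is not retried until the aggregator queues it again; only a crashed instance relies on the timeout.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of queue visibility: a read message is hidden for a
// while and disappears for good only when deleted with its latest receipt.
public class ToyQueue
{
    private class Entry { public string Body; public DateTime VisibleAt; public Guid Receipt; }

    private readonly List<Entry> entries = new List<Entry>();

    // Manual clock so the timeout can be simulated without sleeping.
    public DateTime Now { get; set; } = new DateTime(2010, 1, 1);

    public void Add(string body) => entries.Add(new Entry { Body = body, VisibleAt = Now });

    // Returns null when no message is currently visible.
    public (string Body, Guid Receipt)? Get(TimeSpan visibilityTimeout)
    {
        Entry e = entries.FirstOrDefault(x => x.VisibleAt <= Now);
        if (e == null) return null;
        e.VisibleAt = Now + visibilityTimeout; // hide it from other reader instances
        e.Receipt = Guid.NewGuid();            // only this latest reader may delete it
        return (e.Body, e.Receipt);
    }

    // Fails for a stale receipt, i.e. after the message was handed to someone else.
    public bool Delete(Guid receipt)
    {
        if (receipt == Guid.Empty) return false;
        return entries.RemoveAll(x => x.Receipt == receipt) > 0;
    }

    public int Count => entries.Count;
}
```

For example, two readers calling `Get` back to back receive different feeds; if one of them never calls `Delete`, its feed is handed out again once the clock passes the timeout.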

This is the basic idea of the application. You can download the code from CodePlex: http://azurereader.codeplex.com/
